Blog

Articles about computational science and data science, neuroscience, and open source solutions. Personal stories are filed under Weekend Stories. Browse all topics here. All posts are CC BY-NC-SA licensed unless otherwise stated. Feel free to share, remix, and adapt the content as long as you give appropriate credit and distribute your contributions under the same license.


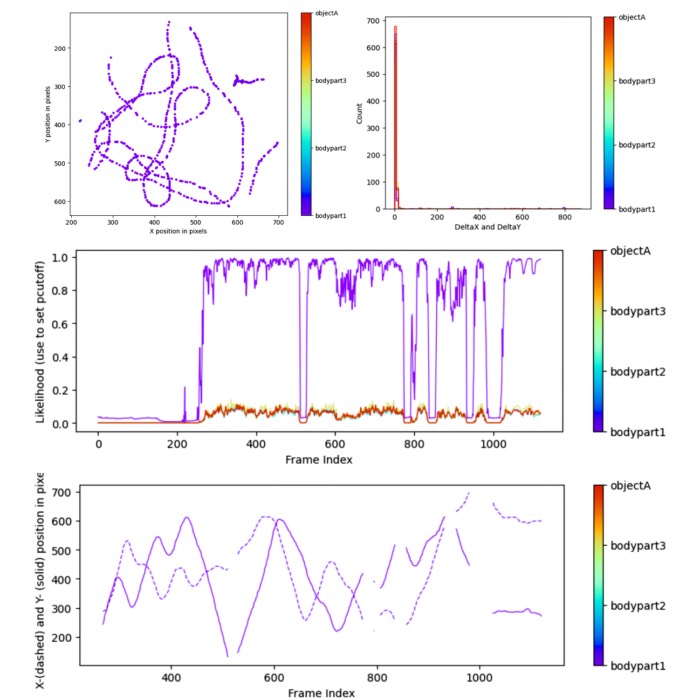

Assessing animal behavior with machine learning: New DeepLabCut tutorial

I have added a hands-on tutorial to the Assessing Animal Behavior lecture. The tutorial covers the GUI-based use of DeepLabCut, a popular open-source software package for markerless pose estimation of animals. It is aimed at neuroscience students with little or no programming knowledge. Feel free to share the tutorial with students or colleagues who might be interested in using DeepLabCut for their own projects.

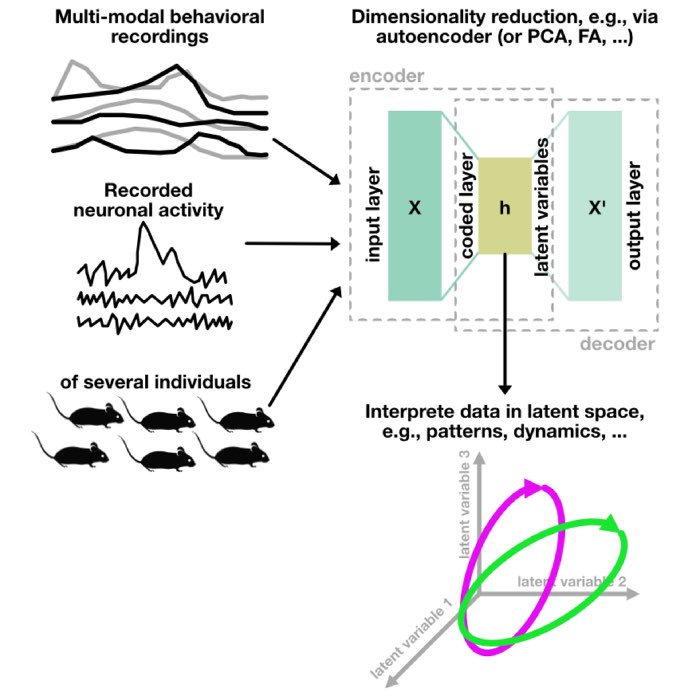

Assessing animal behavior with machine learning

High-throughput and multi-modal behavior experiments, coupled with machine learning analysis, unlock valuable insights into complex systems by capturing diverse behavioral responses and deciphering hidden structures within high-dimensional datasets. I just completed a short introductory lecture on this topic, which is now available in the Teachings section.

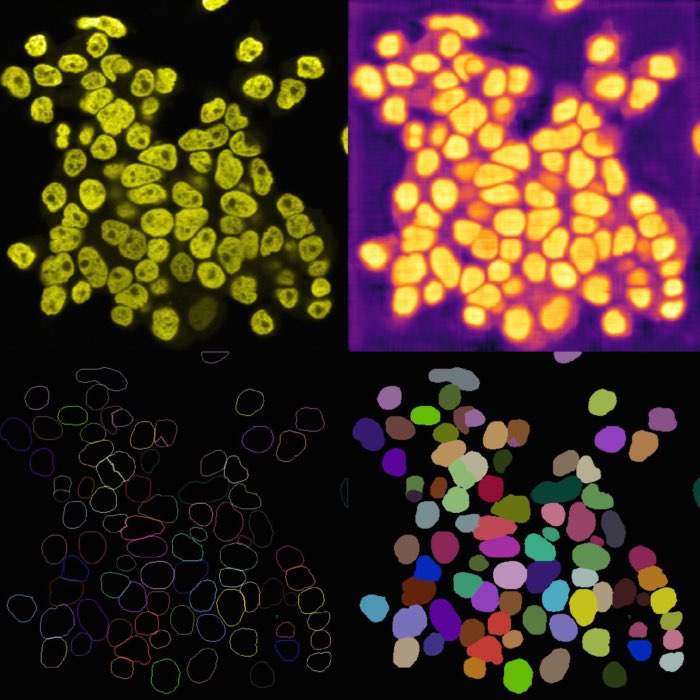

Bioimage analysis with Napari

I’ve added new teaching material on using the free and open-source software (FOSS) Napari for bioimage analysis. Feel free to use and share it.

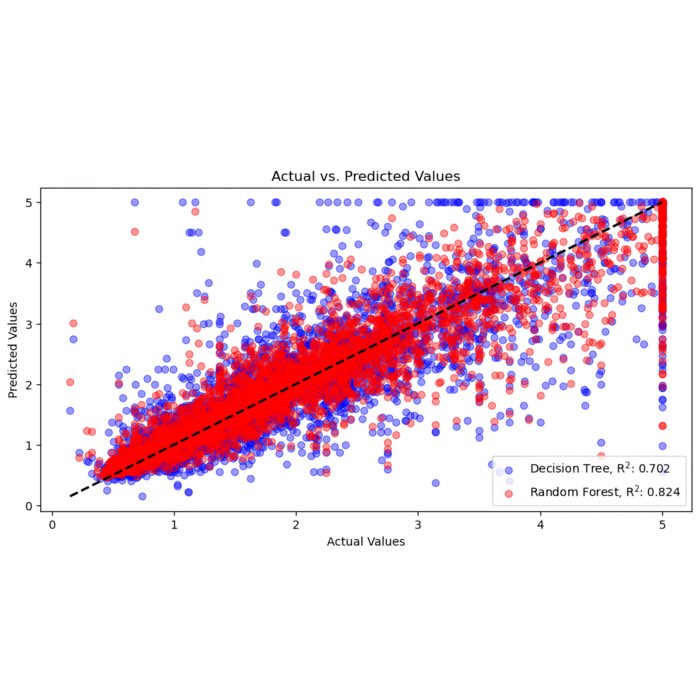

Decision Trees vs. Random Forests for classification and regression: A comparison

Decision trees and random forests are popular machine learning algorithms that are widely used for both classification and regression tasks. In this blog post, we elucidate their theoretical foundations and discuss the differences as well as their advantages and drawbacks.

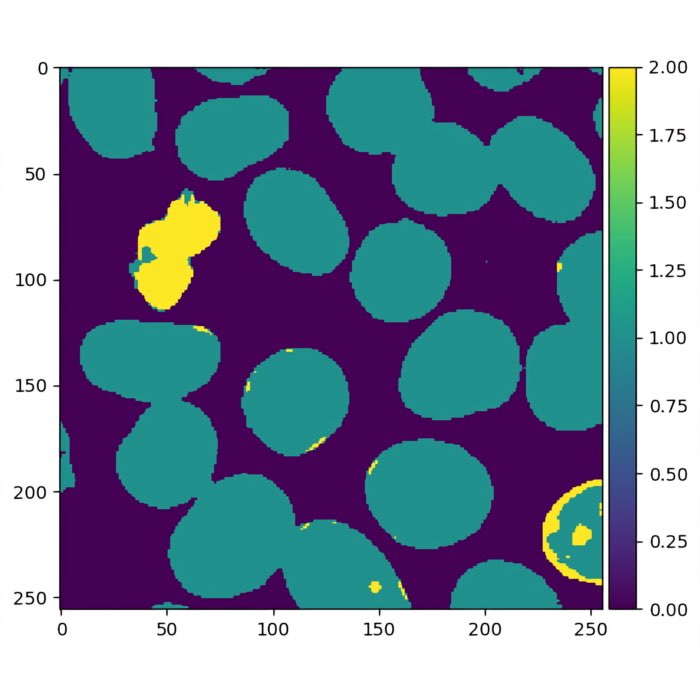

Using random forests for pixel classification

Beyond traditional classification problems, random forests have also proven effective in pixel classification. In this post, we explore how they can be applied to this task.

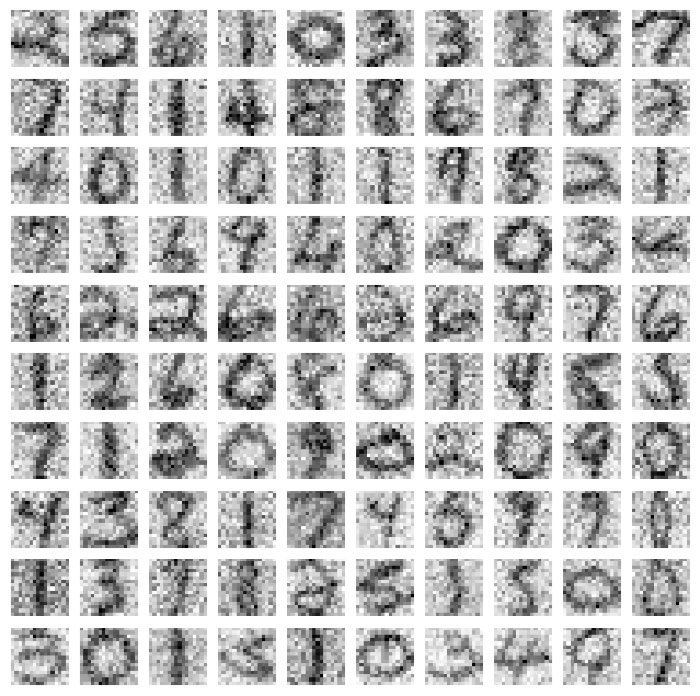

Image denoising techniques: A comparison of PCA, kernel PCA, autoencoder, and CNN

In this post, we compare the performance of PCA, kernel PCA, a denoising autoencoder, and a CNN for image denoising.

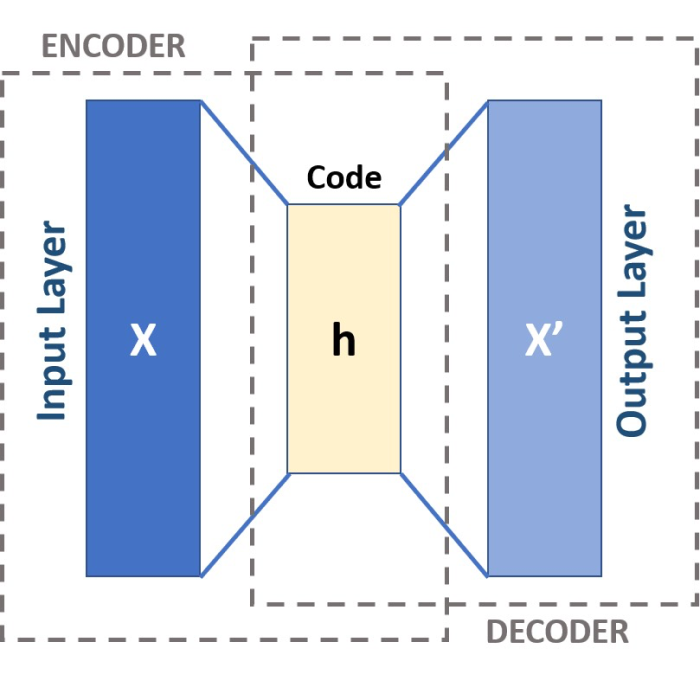

Using Autoencoders to reveal hidden structures in high-dimensional data

In this Python tutorial, we explore the application of autoencoders for dimensionality reduction, demonstrating how this technique can help us uncover and interpret hidden patterns within our data.

Unlocking hidden patterns with Factor Analysis

In this Python tutorial, we dive into Factor Analysis, a statistical method used to uncover hidden, or ‘latent,’ variables within high-dimensional datasets. As with PCA, this technique allows us to simplify complex data structures, which aids data interpretation and decision-making.

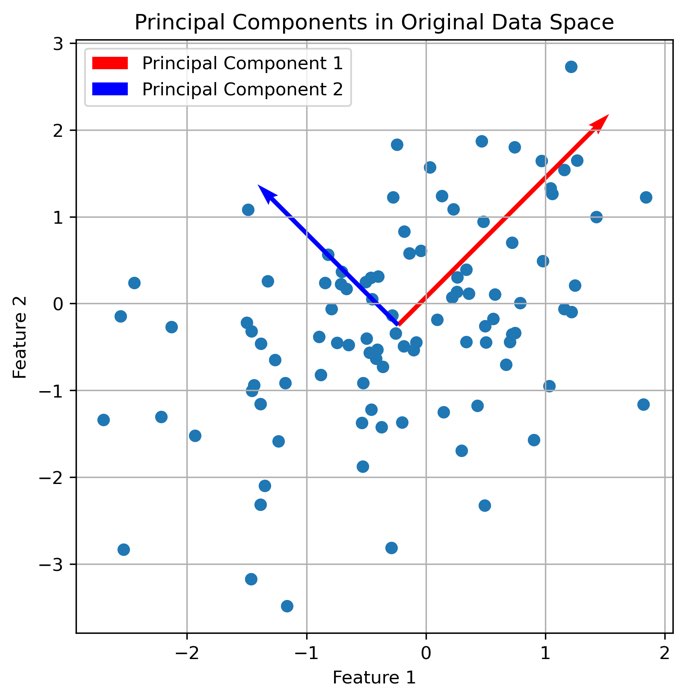

Untangling complexity: harnessing PCA for data dimensionality reduction

This tutorial explores the use of Principal Component Analysis (PCA), a powerful tool for reducing the complexity of high-dimensional data. By delving into both the theoretical underpinnings and practical Python applications, we illuminate how PCA can reveal hidden structures within data and make it more manageable for analysis.

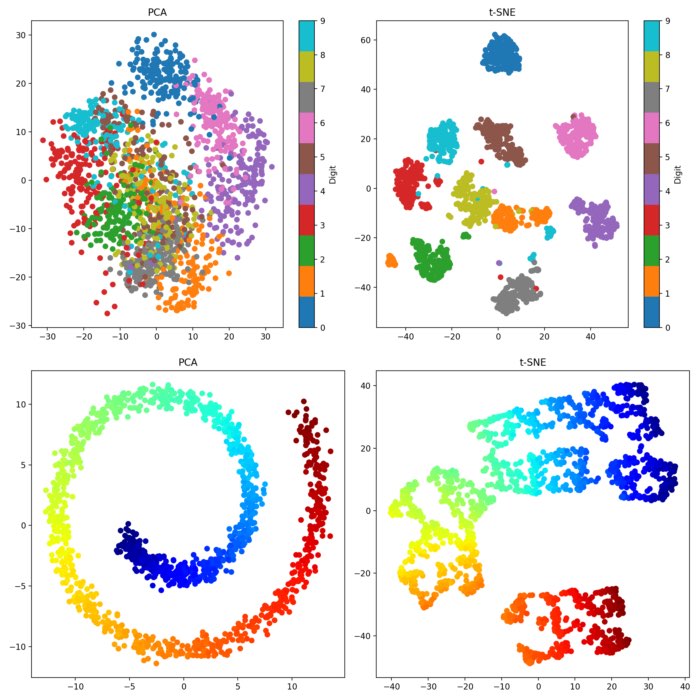

t-SNE and PCA: Two powerful tools for data exploration

Dimensionality reduction techniques play a vital role in both data exploration and visualization. Among these techniques, t-SNE and PCA are widely used and offer valuable insights into complex datasets. In this blog post, we explore the mathematical background of both methods, compare their methodologies, and discuss their advantages and disadvantages. Additionally, we take a look at their practical implementation in Python and compare the results on different sample datasets.