Assessing animal behavior with machine learning: New DeepLabCut tutorial

I have added a hands-on tutorial to the Assessing Animal Behavior lecture. The tutorial covers the GUI-based use of DeepLabCut, a popular open-source software package for markerless pose estimation in animals. DeepLabCut uses deep learning to track user-defined body parts of animals in videos, enabling the study of behavior in a high-throughput and multi-modal fashion.

DeepLabCut logo. Source: deeplabcut.github.io

The tutorial provides a step-by-step guide to the default workflow, from setting up a project through labeling training data and training a neural network to analyzing the results. Its aim is to introduce students to state-of-the-art methods for assessing animal behavior and to give them practical experience with machine learning tools for behavioral analysis.

The target group is neuroscience students with little or no programming knowledge. The tutorial should enable them to use DeepLabCut in their own research projects and to gain insight into the possibilities and limitations of machine learning-based behavioral analysis.

Please feel free to share the tutorial with students or colleagues who might be interested in using DeepLabCut for their own projects.

Example applications of DeepLabCut: multi-animal arena tracking, mouse whisker tracking, mouse pupil tracking, mouse paw tracking, and mouse locomotion tracking. Of course, other animals and body parts can be tracked as well (even human body parts). Source: deeplabcut.github.io

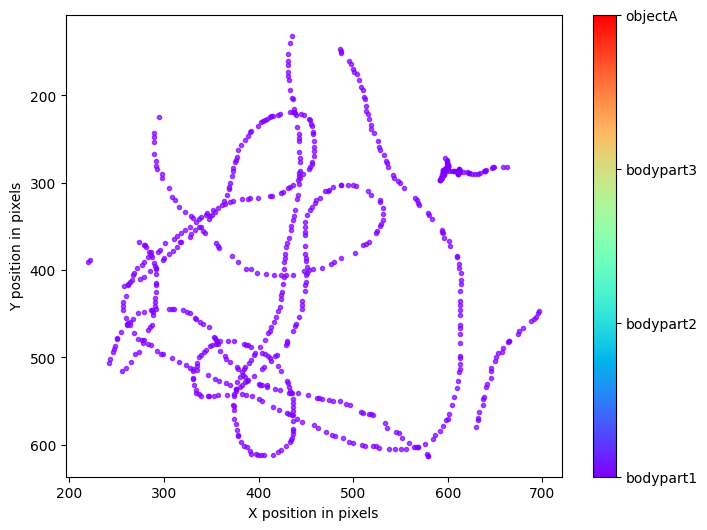

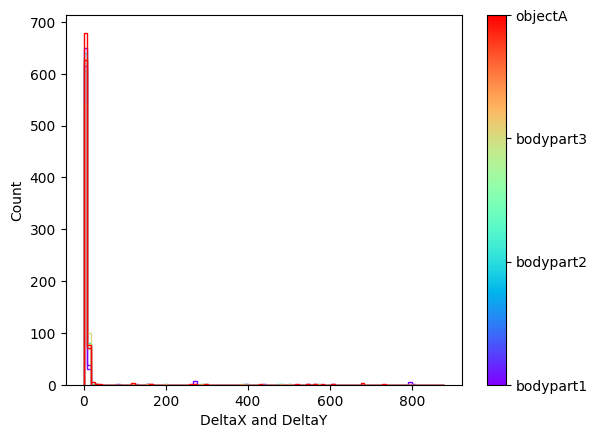

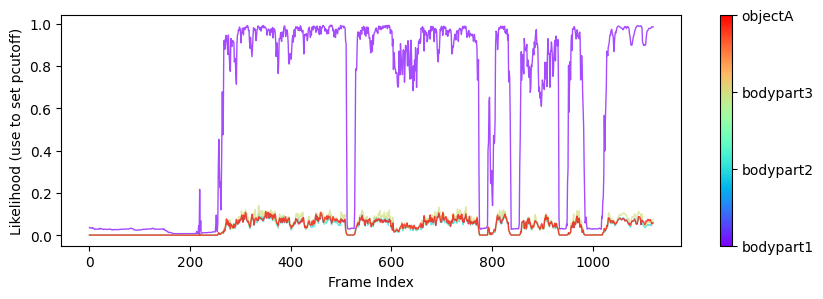

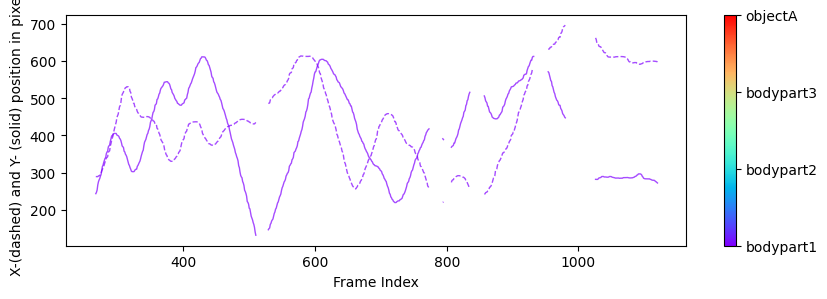

Plots from one example “plot-poses” folder, showing the trajectories of the tracked body parts, a histogram of the tracked body parts’ x- and y-coordinates, the likelihood of the predictions as a function of frame index, and the filtered x- and y-coordinates of the tracked body parts (points whose likelihood falls below the threshold pcutoff are treated as missing and therefore omitted).
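The pcutoff filtering described in the caption above can be illustrated with a short Python sketch. This is a minimal, hypothetical example, not DeepLabCut's own code: it assumes that each frame's prediction for one body part is available as an (x, y, likelihood) triple, mirroring the x, y, and likelihood columns of DeepLabCut's output files, and it uses an illustrative pcutoff of 0.6. The function names `filter_by_pcutoff` and `fraction_kept` are made up for this example.

```python
import math
from typing import List, Tuple

# Hypothetical per-frame prediction for one body part: (x, y, likelihood).
Prediction = Tuple[float, float, float]


def filter_by_pcutoff(
    predictions: List[Prediction], pcutoff: float = 0.6
) -> List[Tuple[float, float]]:
    """Return (x, y) per frame, with low-confidence points set to NaN.

    A point is kept if its likelihood is at least pcutoff; otherwise both
    coordinates are replaced by NaN so later analyses treat them as missing.
    """
    filtered = []
    for x, y, likelihood in predictions:
        if likelihood >= pcutoff:
            filtered.append((x, y))
        else:
            filtered.append((math.nan, math.nan))
    return filtered


def fraction_kept(filtered: List[Tuple[float, float]]) -> float:
    """Share of frames whose coordinates survived the cutoff."""
    if not filtered:
        return 0.0
    kept = sum(1 for x, _ in filtered if not math.isnan(x))
    return kept / len(filtered)


if __name__ == "__main__":
    # Made-up data for five frames (not real DeepLabCut output).
    frames = [
        (120.5, 88.0, 0.98),
        (121.0, 88.4, 0.95),
        (300.2, 10.1, 0.12),  # low likelihood: probably a wrong detection
        (122.3, 89.1, 0.91),
        (123.0, 89.5, 0.55),  # just below the illustrative cutoff
    ]
    points = filter_by_pcutoff(frames, pcutoff=0.6)
    for index, (x, y) in enumerate(points):
        print(index, x, y)
    print(f"kept {fraction_kept(points):.0%} of frames")  # prints "kept 60% of frames"
```

Marking low-confidence points as NaN instead of deleting those frames keeps the frame index aligned with the video, which is useful when relating the tracked poses to time or to other recordings.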

Further reading

- DeepLabCut website

- DeepLabCut documentation

- DeepLabCut User Guide (for single animal projects)

- GitHub repository of DeepLabCut

- Mathis, A., Mamidanna, P., Cury, K.M. et al., DeepLabCut: markerless pose estimation of user-defined body parts with deep learning, Nat Neurosci 21, 1281–1289 (2018). doi: 10.1038/s41593-018-0209-y

- Nath, T., Mathis, A., Chen, A.C. et al., Using DeepLabCut for 3D markerless pose estimation across species and behaviors, Nat Protoc 14, 2152–2176 (2019). doi: 10.1038/s41596-019-0176-0

- Lauer, J., Zhou, M., Ye, S. et al., Multi-animal pose estimation, identification and tracking with DeepLabCut, Nat Methods 19, 496–504 (2022). doi: 10.1038/s41592-022-01443-0